Week 2A: Powers of the adjacency matrix

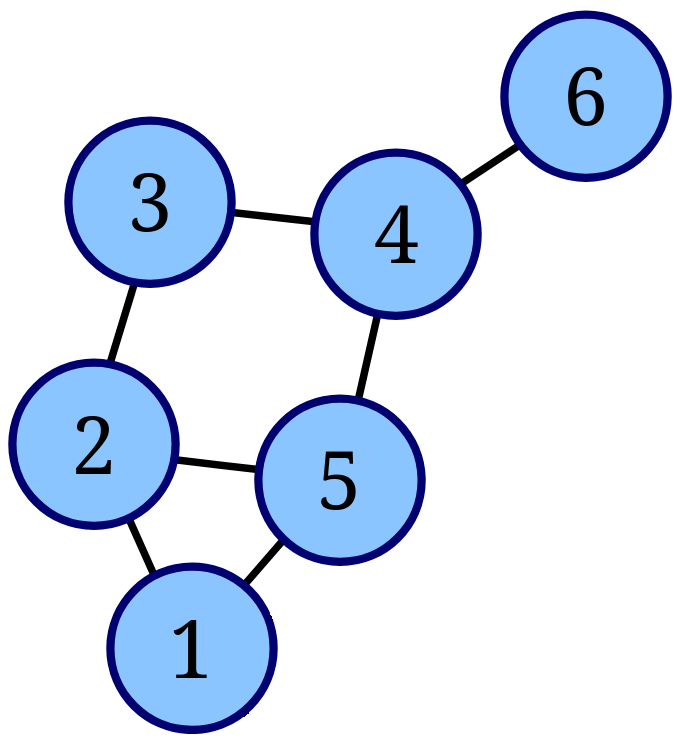

A social media website (like LinkedIn) may suggest new friends or connections. Matrix algebra can be used to analyze the “proximity” of people in a complex network of connections. Consider the example network of six people below: lines (edges) are drawn between persons (nodes) to indicate the existing connections.

In a first step, we can characterize this network by its adjacency matrix $A$, a matrix where each element $a_{ij}$ is either 1, if there is a connection between persons $i$ and $j$, or 0, if there is not. Because connections are mutual ($a_{ij} = a_{ji}$), the adjacency matrix is symmetric. For the given graph above, the adjacency matrix is,

Put differently, $a_{ij}$ gives the number of paths of length one (a single edge) between persons $i$ and $j$. Let's say we broaden our scope and look at the number of paths between two persons that have a length of two edges. This means counting the persons to whom both of them are directly connected. Say we want to find out how many paths of length 2 there are between nodes 2 and 4; we can inspect the second and fourth rows of the matrix,

The 3rd and 5th columns have 1s in both rows, indicating a shared one-edge connection, and thus there are two possible paths from node 2 to node 4 over two edges (via node 3 or via node 5). Given the symmetry of the matrix, we could also have compared the 2nd row to the 4th column instead of the 4th row.

You can now see that finding the number of two-edge paths is nothing but a row-column multiplication! As such, we can multiply $A$ with itself to get $A^2$, whose entries list the number of two-edge connections between two nodes.
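As a minimal sketch of this row-column argument, the Octave snippet below builds the adjacency matrix of a small six-person network and compares the row-column product with the corresponding entry of the squared matrix. The edge list is a hypothetical example chosen for illustration (it is not read off the figure); it is only arranged so that nodes 2 and 4 share the neighbours 3 and 5, as in the text.

```octave
% Hypothetical edge list (an assumption, NOT the network from the figure)
edges = [1 2; 2 3; 2 5; 3 4; 3 5; 4 5; 4 6];

% Build the symmetric 6x6 adjacency matrix from the edge list
A = zeros(6);
for k = 1:size(edges, 1)
  A(edges(k, 1), edges(k, 2)) = 1;
  A(edges(k, 2), edges(k, 1)) = 1;
end

% Row 2 times column 4 counts the neighbours shared by nodes 2 and 4
n_24 = A(2, :) * A(:, 4);

% The full matrix product holds this count for every pair of nodes
A2 = A * A;

printf("row-column product: %d, entry (2,4) of A*A: %d\n", n_24, A2(2, 4));
```

Both numbers agree because entry $(i, j)$ of a matrix product is, by definition, row $i$ of the first factor times column $j$ of the second.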

We can repeat the same argument for the three-edge connections encoded in $A^3$. For the given graph it is,

We see that this matrix has non-zero diagonal entries. These correspond to paths from a node back to itself via three edges, that is, triangles. Each triangle contributes six such closed paths: starting from each of its three nodes, it can be traversed in two directions (left or right oriented). As such, we can sum the entries on the diagonal and divide by six to get the number of triangles in the network. Using the trace ($\mathrm{tr}$) we can write for the number of triangles in a network ($N_\triangle$),

$$N_\triangle = \frac{\mathrm{tr}(A^3)}{6}.$$

Thank you, matrix algebra, for this neat fact!
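The triangle count can be tried out in a few lines of Octave. This is only a sketch under stated assumptions: the edge list below is a hypothetical six-person network (not the one in the figure) that was constructed to contain exactly two triangles, 2-3-5 and 3-4-5.

```octave
% Hypothetical edge list (an assumption, NOT the network from the figure)
edges = [1 2; 2 3; 2 5; 3 4; 3 5; 4 5; 4 6];

% Build the symmetric adjacency matrix
A = zeros(6);
for k = 1:size(edges, 1)
  A(edges(k, 1), edges(k, 2)) = 1;
  A(edges(k, 2), edges(k, 1)) = 1;
end

% Matrix power: entry (i,j) counts the three-edge connections from i to j
A3 = A^3;

% Every triangle is counted six times on the diagonal (3 start nodes x 2 directions)
n_triangles = trace(A3) / 6;

printf("trace(A^3) = %d, triangles = %d\n", trace(A3), n_triangles);
```

Note that `A^3` is the matrix power here; the element-wise power `A.^3` would simply return `A` again for a matrix of zeros and ones.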

Week 2A: Polynomial-line fitting revisited

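Consider three data points with specific $x$-coordinates but with variable values, given by $x = \{-1, 0, 1\}$ and $y = \{y_1, y_2, y_3\}$. We can again try to fit a parabolic function,

$$y = a_1 x^2 + a_2 x + a_3.$$

The matrix equation is,

$$\begin{pmatrix} 1 & -1 & 1 \\ 0 & 0 & 1 \\ 1 & 1 & 1 \end{pmatrix} \begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix} = \begin{pmatrix} y_1 \\ y_2 \\ y_3 \end{pmatrix},$$

or in short-hand notation,

$$A\mathbf{a} = \mathbf{y}.$$

We can rewrite the equation by left-multiplication with the matrix inverse,

$$\mathbf{a} = A^{-1}\mathbf{y}.$$

Substituting the coefficients gives,

$$\begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix} = \begin{pmatrix} 1/2 & -1 & 1/2 \\ -1/2 & 0 & 1/2 \\ 0 & 1 & 0 \end{pmatrix} \begin{pmatrix} y_1 \\ y_2 \\ y_3 \end{pmatrix}.$$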
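A quick numerical check can make this concrete. The sketch below assumes the same matrix `A` as in the Octave listing of this section (rows $[x^2, x, 1]$ at $x = -1, 0, 1$); the data values in `y` are arbitrary numbers picked for illustration.

```octave
% Rows are [x^2, x, 1] evaluated at x = -1, 0, 1
A = [1 -1 1; 0 0 1; 1 1 1];
Ai = inv(A);

% Arbitrary example values at the three points (an assumption)
y = [2; -1; 3];

% Coefficients of the parabola, highest power first
a = Ai * y;

% Evaluate the fitted parabola at the data locations
y_fit = polyval(a, [-1; 0; 1]);

disp(Ai);
disp([y, y_fit]);   % the two columns should agree
```

The fitted parabola passes through all three points, since three points with distinct $x$-coordinates determine a parabola uniquely.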

Instead of doing the Gaussian elimination procedure for every column vector $\mathbf{y}$, we can find the polynomial coefficients ($\mathbf{a}$) using a simple matrix multiplication. We can apply this in Octave to generate the frames of the following movie.

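    A = [[1,-1,1];[0,0,1];[1,1,1]];   % rows are [x^2, x, 1] at x = -1, 0, 1
    Ai = inv(A);                      % invert once, reuse for every frame
    for i = 1:200
      t = 2*pi*i/200;
      y = [sin(t - 1); cos(t + 1); sin(2*t)];   % time-dependent data values
      a = Ai*y;                       % parabola coefficients, highest power first
      clf
      fplot(@(x) polyval(a, x), [-1.1, 1.1], 'r', 'linewidth', 2);
      hold on
      scatter([-1, 0, 1], y, 'filled')
      drawnow                         % display this frame before computing the next
    end

Finite difference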

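If we (can) assume that the data is part of some continuous signal ($f(x)$), it turns out to be quite OK to guess that locally this function can be approximated with a polynomial. Say we wish to find the second derivative at $x = 0$; we can differentiate our polynomial $y = a_1 x^2 + a_2 x + a_3$ twice,

$$\frac{\mathrm{d}^2 y}{\mathrm{d}x^2} = 2 a_1.$$

By reading the first row of the matrix equation $\mathbf{a} = A^{-1}\mathbf{y}$, which gives $a_1 = \tfrac{1}{2}(y_1 - 2 y_2 + y_3)$, we can thus conclude that:

$$f''(0) \approx y_1 - 2 y_2 + y_3.$$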

The process of using point-location values and estimating derivatives is part of the finite difference method, which is used for all kinds of numerical models in science and engineering.
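The following Octave sketch illustrates the idea for the three points at $x = -1, 0, 1$ used in this section. The weights are read from the first row of the inverse matrix; the two test functions (a parabola and a cosine) are assumptions picked for illustration.

```octave
% Rows are [x^2, x, 1] evaluated at x = -1, 0, 1
A = [1 -1 1; 0 0 1; 1 1 1];
Ai = inv(A);

% Second derivative of the parabola is 2*a1, so the weights are twice row 1
stencil = 2 * Ai(1, :);

x = [-1; 0; 1];

% For a parabola the estimate is exact: f(x) = 3x^2 - x + 2 has f'' = 6
d2_parabola = stencil * (3*x.^2 - x + 2);

% For cos(x) it is only an approximation of the exact value -cos(0) = -1
d2_cos = stencil * cos(x);

disp(stencil);
printf("parabola: %g, cosine: %g (exact: -1)\n", d2_parabola, d2_cos);
```

The cosine estimate is off because the points are a full unit apart; the parabola is recovered exactly because the fit itself is a parabola.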

Week 2B: The vector product (cross product)

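Consider two vectors expressed with a Cartesian basis, $\mathbf{a} = a_x \mathbf{e}_x + a_y \mathbf{e}_y + a_z \mathbf{e}_z$ and $\mathbf{b} = b_x \mathbf{e}_x + b_y \mathbf{e}_y + b_z \mathbf{e}_z$. We can write their vector product as the determinant of a carefully constructed matrix,

$$\mathbf{a} \times \mathbf{b} = \begin{vmatrix} \mathbf{e}_x & \mathbf{e}_y & \mathbf{e}_z \\ a_x & a_y & a_z \\ b_x & b_y & b_z \end{vmatrix} = (a_y b_z - a_z b_y)\,\mathbf{e}_x + (a_z b_x - a_x b_z)\,\mathbf{e}_y + (a_x b_y - a_y b_x)\,\mathbf{e}_z.$$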

Thank you, determinant, for the convenient mnemonic!
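As a small sanity check of the mnemonic, the Octave sketch below expands the determinant along its first row (one 2x2 determinant per basis vector, with alternating signs) and compares the result with Octave's built-in `cross`. The two example vectors are arbitrary values chosen for illustration.

```octave
% Arbitrary example vectors (an assumption)
a = [1 2 3];
b = [4 5 6];

% Cofactor expansion along the row of basis vectors: signs are +, -, +
c = [ det([a(2) a(3); b(2) b(3)]), ...
     -det([a(1) a(3); b(1) b(3)]), ...
      det([a(1) a(2); b(1) b(2)])];

% Built-in vector product for comparison
c_builtin = cross(a, b);

disp([c; c_builtin]);   % the two rows should agree

% The vector product is perpendicular to both inputs
printf("dot(c, a) = %g, dot(c, b) = %g\n", dot(c, a), dot(c, b));
```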