Dust filtration

Over the past few months I have done a few small woodworking projects in my garage workshop. Despite my best efforts to vacuum up the dust during the various dust-producing routines, everything in my garage is now covered by a layer of fine dust. Since I plan to do more of these projects, I want to get the dust out of the air before it settles. To that end, I bought a used kitchen extractor hood and two bag-style filters (fine and coarse). It is all mounted in between the roof beams. I hope the dust will be gone while the heat is retained.

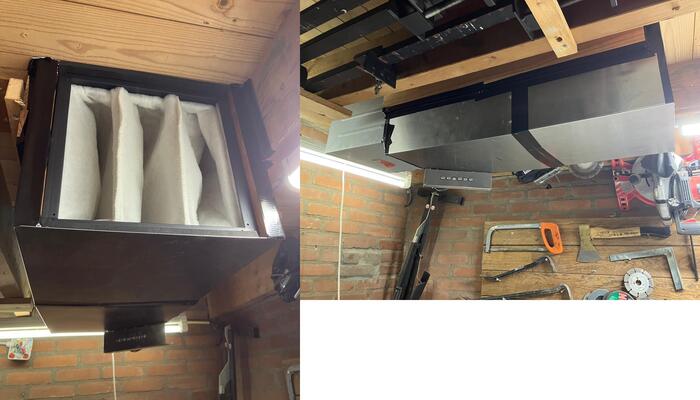

You can see the design in the pictures below.

The blower and the fine-filter stage (above), and the final assembly: the blower, fine filter and coarse filter. The buttons for the blower power mode are also mounted. Click for enlargements.

Split-solver dual-grid AMR

I recently learned how to couple two Basilisk solvers, each running with its own adaptive mesh refinement (AMR). This is interesting because it allows one to choose an optimal grid for the various solver steps and fields. Consider, for example, the so-called “vorticity-streamfunction” ($\omega$-$\psi$) formulation of the Euler equations for fluid flow in two dimensions. It consists of two equations: First, the advection of vorticity,

$$\frac{\partial \omega}{\partial t} + \mathbf{u} \cdot \nabla \omega = 0, \qquad \mathbf{u} = \left(-\frac{\partial \psi}{\partial y}, \frac{\partial \psi}{\partial x}\right),$$

And second, the (Poisson equation) relation between $\omega$ and $\psi$,

$$\nabla^2 \psi = \omega.$$

The time integration of the first equation requires a representation of the fields $\omega$ and $\psi$ such that the gradients can be accurately estimated, whereas solving the (potentially expensive) linear system only involves estimates for the gradient of the streamfunction $\psi$. As such, we can split these two steps over two adaptive meshes, where a fine mesh is tasked with the time integration of the advection problem and a coarse mesh is employed to solve the Poisson-equation problem. The results are shown below.
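The splitting idea can be sketched outside of Basilisk. The following is a minimal NumPy sketch and not the Basilisk implementation: it replaces the two adaptive meshes with two uniform periodic grids (a fine one, and a coarse one at half the resolution), assumes the sign convention $\nabla^2 \psi = \omega$ with $\mathbf{u} = (-\partial_y \psi, \partial_x \psi)$, and swaps in an FFT Poisson solver and first-order upwind advection. The domain size `L` and all function names are hypothetical.

```python
import numpy as np

L = 2 * np.pi  # side length of the periodic square domain (assumed)

def restrict(fine):
    """Fine (2n x 2n) -> coarse (n x n) by averaging 2x2 blocks."""
    n = fine.shape[0] // 2
    return fine.reshape(n, 2, n, 2).mean(axis=(1, 3))

def prolong(coarse):
    """Coarse (n x n) -> fine (2n x 2n) by piecewise-constant injection."""
    return np.repeat(np.repeat(coarse, 2, axis=0), 2, axis=1)

def solve_poisson(omega):
    """Solve lap(psi) = omega on the periodic square with an FFT."""
    n = omega.shape[0]
    k = 2 * np.pi * np.fft.fftfreq(n, d=L / n)  # angular wavenumbers
    kx, ky = np.meshgrid(k, k, indexing="ij")
    k2 = kx**2 + ky**2
    k2[0, 0] = 1.0                       # avoid 0/0 for the mean mode
    psi_hat = -np.fft.fft2(omega) / k2
    psi_hat[0, 0] = 0.0                  # fix the free constant: zero-mean psi
    return np.fft.ifft2(psi_hat).real

def velocity(psi):
    """u = (-dpsi/dy, dpsi/dx) with centred, periodic differences."""
    h = L / psi.shape[0]
    dpsi_dx = (np.roll(psi, -1, axis=0) - np.roll(psi, 1, axis=0)) / (2 * h)
    dpsi_dy = (np.roll(psi, -1, axis=1) - np.roll(psi, 1, axis=1)) / (2 * h)
    return -dpsi_dy, dpsi_dx

def advect(omega, u, v, dt):
    """One first-order upwind step of d(omega)/dt + u . grad(omega) = 0."""
    h = L / omega.shape[0]
    dxm = (omega - np.roll(omega, 1, axis=0)) / h    # backward difference in x
    dxp = (np.roll(omega, -1, axis=0) - omega) / h   # forward difference in x
    dym = (omega - np.roll(omega, 1, axis=1)) / h
    dyp = (np.roll(omega, -1, axis=1) - omega) / h
    return omega - dt * (np.maximum(u, 0) * dxm + np.minimum(u, 0) * dxp
                         + np.maximum(v, 0) * dym + np.minimum(v, 0) * dyp)

def step(omega_fine, dt):
    """Poisson solve on the coarse grid, advection on the fine grid."""
    psi_coarse = solve_poisson(restrict(omega_fine))
    u_coarse, v_coarse = velocity(psi_coarse)
    return advect(omega_fine, prolong(u_coarse), prolong(v_coarse), dt)

# Usage: a pair of opposite-signed Gaussian vortices on a 64 x 64 fine grid.
n = 64
x = (np.arange(n) + 0.5) * L / n
X, Y = np.meshgrid(x, x, indexing="ij")
omega = (np.exp(-((X - 2.5)**2 + (Y - np.pi)**2) / 0.2)
         - np.exp(-((X - 3.8)**2 + (Y - np.pi)**2) / 0.2))
for _ in range(10):
    omega = step(omega, dt=0.01)
```

The expensive linear solve only ever sees a quarter of the cells, while the vorticity keeps its fine-grid resolution. With real AMR the two grids would additionally adapt independently, which this uniform-grid sketch does not attempt.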

Battery management system

After a wind gust had blown over our cargo bike, the pedal-assist system did not function anymore due to a damaged battery. After some electrical-engineering investigations to the best of my abilities, I decided to “hot wire” the battery-management system (BMS) by soldering on a piece of washing line (see figure below, left panel). It worked very well for one charge, after which it would no longer charge a drained battery.

After a few exhausting trips without the pedal assist (e.g. in the figure above, right panel) I decided to order a new BMS. One soldering evening later, the battery was good to go again. Despite the new BMS install causing a wiring mess (see figure below, left panel), the functioning pedal assist means that we can go up small inclines once again (see figure below, right panel). Images are links to enlargements.

Hadamard vector product

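Given a vector $\mathbf{a} = (a_1, \dots, a_n)$ and another vector $\mathbf{b} = (b_1, \dots, b_n)$, we can define a component-wise (Hadamard) product $\mathbf{a} \circ \mathbf{b}$ by,

$$(\mathbf{a} \circ \mathbf{b})_i = a_i b_i.$$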

Indeed,

Euclidean vectors equipped with such a binary operation would form an Abelian group (at least when restricted to vectors without zero components, so that every element has an inverse) and should be less controversial than the scalar/dot/inner product or the vector/cross product. To investigate why it is not popular, we look at its geometric properties:

- The “length” of the product (the sum of its components) is equal to the inner product: $\sum_i (\mathbf{a} \circ \mathbf{b})_i = \mathbf{a} \cdot \mathbf{b}$.

- The identity element breaks the symmetry between negative and positive directions …

- … Hence, the product does not retain its orientation under rotation.

- Its direction … ?

Let's see if we can get inspiration from some examples:

The direction of the product is not very intuitive.
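The properties listed above can be checked with a few lines of plain Python. This is a minimal sketch with hypothetical helper names (`hadamard`, `dot`, `rotate`) and arbitrarily chosen example vectors; it takes the all-ones vector as the identity element and compares "rotate, then multiply" with "multiply, then rotate".

```python
import math

def hadamard(a, b):
    """Component-wise product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x * y for x, y in zip(a, b)]

def dot(a, b):
    """Inner product, written as the sum of the Hadamard product's components."""
    return sum(hadamard(a, b))

def rotate(v, angle):
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]

a, b = [1.0, 2.0], [3.0, -1.0]
ab = hadamard(a, b)          # component-wise product of a and b
ones = [1.0, 1.0]            # identity element: hadamard(a, ones) gives a back

# Rotating both factors by a quarter turn does not rotate the product:
quarter = math.pi / 2
rotated_then_multiplied = hadamard(rotate(a, quarter), rotate(b, quarter))
multiplied_then_rotated = rotate(ab, quarter)
```

Here the two results differ in the sign of their first component (up to rounding, $(-2, 3)$ versus $(2, 3)$), which illustrates why the product depends on the choice of coordinate axes.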

Jargon in linear algebra

During my BSc studies, I found that the cryptic names of the various mathematical concepts made grasping them (as a Dutch person) harder than it should have been. As I am now teaching linear algebra, I wish to alleviate this problem by explaining to the students how these names relate to their associated concepts. Here is a list of what I found.

A womb can be viewed as a container for its contents, similar(?) to how a rectangular array of numbers can be contained in an algebraic object. As such, the Latin and (old) French word for womb is used to denote a matrix¹.

When doing row reduction/Gaussian elimination, the row-sweeping operation revolves around a non-zero entry that is selected as a reference to guide the row subtractions. The center of an axle, a central entity around which things revolve, is also called a pivot location.

Some advantages of positioning military units on a battlefield in a line or in a frontal formation may be combined by placing them in a staggered, so-called echelon form. A similar staircase-like arrangement is made by the pivot locations of a matrix after Gaussian elimination has taken place. The word echelon in turn stems from a French word for ladder (“échelle”).
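To make the staircase of pivot locations concrete, here is a minimal sketch of forward Gaussian elimination in plain Python. The function name `row_echelon` and the example matrix are hypothetical, and exact fractions are used to sidestep rounding.

```python
from fractions import Fraction

def row_echelon(rows):
    """Return (echelon form, list of (row, column) pivot locations)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # row in which the next pivot will be placed
    n_cols = len(m[0]) if m else 0
    for c in range(n_cols):
        # Look for a non-zero entry in column c, at or below row r.
        pr = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pr is None:
            continue  # no pivot in this column
        m[r], m[pr] = m[pr], m[r]  # move the pivot row into place
        # Sweep: subtract multiples of the pivot row from the rows below it.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        pivots.append((r, c))
        r += 1
        if r == len(m):
            break
    return m, pivots

A = [[1, 2, 1, 4],
     [2, 4, 0, 6],
     [1, 2, 2, 5]]
U, pivots = row_echelon(A)
```

For this matrix the pivots end up in the first and third columns of the first two rows, and the last row is swept to zeros: each pivot sits strictly to the right of the one above it, like the steps of a ladder.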

The word homogeneous refers to things being “the same”. The right-most column of the augmented matrix for the homogeneous equations is always the same (a column of zeros) and remains as such, even after elementary row operations have been applied.

The central entity of an object may be called a kernel. Since every vector in a vector space is accompanied by a vector in the opposite direction, the “center” of mass of the range of a transformation ($T$) may be said to be the zero vector ($\mathbf{0}$). As such, we say that the kernel ($\ker T$) is mapped there.

One may carry something from one position to another. The displacement entity is then a carrier. This displacement is often denoted with a vector, the Latin word for carrier.

An invertible matrix $A$ can be paired with its inverse $A^{-1}$. Note that $A$ is also the inverse of $A^{-1}$. A matrix that is not invertible cannot be paired and therefore remains alone. We therefore say that this is a singular matrix.

When a criminal is caught via the hints he/she left behind at the scene, these hints can be said to have made a trail that led to the perpetrator. Such a trail can also “follow” a matrix $A$. Of particular interest is the “similarity” transformation ($A \mapsto B$) via an invertible matrix $P$, defined as $B = P A P^{-1}$. A prime example of such a matrix trail is therefore called the trace of a matrix, which does not change even though generally $A \neq B$.
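The invariance of the trace is easy to verify by hand or in a few lines of plain Python. The sketch below assumes the similarity transformation takes the form $B = P A P^{-1}$; the helper names and the example matrices are hypothetical, with $P$ chosen to have determinant one so that its inverse has integer entries.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))

A = [[2, 1],
     [0, 3]]
P = [[1, 1],
     [1, 2]]
P_inv = [[2, -1],
         [-1, 1]]   # inverse of P, since det(P) = 1

B = matmul(matmul(P, A), P_inv)   # similarity transform of A
```

Here `B` works out to `[[0, 2], [-3, 5]]`, which looks nothing like `A`, yet both matrices have trace 5.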

The length ($\|\mathbf{v}\|$) of a vector ($\mathbf{v}$) can be expressed using the inner product ($\|\mathbf{v}\|^2 = \mathbf{v} \cdot \mathbf{v}$). H. Grassmann was the first to study this product of a vector with itself before it was applied as a product of two different vectors. He therefore found this product to be inward-looking/introverted, and named it as such (albeit in German).

In a linear transformation, there may exist characteristic vectors that are only scaled by a certain characteristic value. These values may be found as the roots of the characteristic polynomial. Since they are so characteristic, they belong to the transformation. A loose German (and Dutch) translation of something that “belongs” to you is “eigen”, giving rise to the eigenvectors and eigenvalues².

The singular value decomposition (SVD) of a matrix ($A$) places singular values ($\sigma_i$) in a diagonal matrix. These values are such that $A^T A - \sigma_i^2 I$ is a singular matrix (cf. eigenvalues).
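The link between singular values and singular matrices can be checked for a small case. The sketch below assumes the relevant matrix is $A^T A - \sigma^2 I$, restricts itself to real $2 \times 2$ matrices so that the eigenvalues of $A^T A$ follow from a quadratic, and uses hypothetical function names.

```python
import math

def gram(A):
    """Entries (p, q, r) of the symmetric matrix A^T A = [[p, q], [q, r]]."""
    (a, b), (c, d) = A
    return a * a + c * c, a * b + c * d, b * b + d * d

def singular_values_2x2(A):
    """Singular values of a real 2x2 matrix, largest first."""
    p, q, r = gram(A)
    mean = (p + r) / 2
    radius = math.hypot((p - r) / 2, q)
    # The eigenvalues of A^T A are mean +/- radius; the singular values
    # are their square roots (max() guards against tiny negative rounding).
    return math.sqrt(mean + radius), math.sqrt(max(mean - radius, 0.0))

def shifted_gram_det(A, sigma):
    """Determinant of A^T A - sigma^2 I."""
    p, q, r = gram(A)
    return (p - sigma**2) * (r - sigma**2) - q * q

A = [[3.0, 0.0],
     [4.0, 5.0]]
s1, s2 = singular_values_2x2(A)
```

For this matrix $A^T A$ has eigenvalues 45 and 5, so the singular values are $\sqrt{45}$ and $\sqrt{5}$. The determinant `shifted_gram_det(A, s)` vanishes (up to rounding) at exactly these two values of `s` and is non-zero elsewhere.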